Human Error Modelling was a project at the University of London concerned with formal modelling of human cognition to improve user interfaces. Though that project ended in 2008, related work continues. An earlier paper by Paul Curzon and Ann Blandford gives a good idea of what the research is about. Here, I summarize their example, and use the same example to illustrate a limitation and pitfall of the approach.

The paper's title, "Using a Verification System to Reason about Post-Completion Errors" [pdf], refers to a type of error that a bank customer might make when getting money from an automated teller machine: on receiving the cash, the person walks away, forgetting their card is still in the bank machine. To prevent users from making this mistake, ATMs are now designed to dispense the cash only after the card has been removed. Forcing this order on customers works because they are less likely to forget the cash than their card: getting the cash is the primary goal of a customer going to an ATM, while recovering the card is a secondary objective that arises only because the machine requires the card to be inserted before the withdrawal can be made.

Verification systems are approaches designed to check that a modelled system fulfills certain requirements, for example, that a set of traffic lights can never enter a state where intersecting streams of traffic both have a green light. This does not refer to testing, which might miss some obscure set of circumstances in which the system fails, but to something more rigorous: theorem proving or model checking, where a computer assists in demonstrating mathematically that the problem cannot occur.

What Curzon and Blandford explore is the possibility of extending the model of the system to include a cognitive model of a user susceptible to post-completion errors – or, more generally, any kind of error the cognitive basis for which can plausibly be reproduced. (Formal user modelling is not in itself original; see the paper for references.) It can then be verified – with, for example, a model checker – that the combined model of the user interacting with the electronic system cannot enter a state where the user's main goal has been achieved but an incidental, forgettable goal has not.

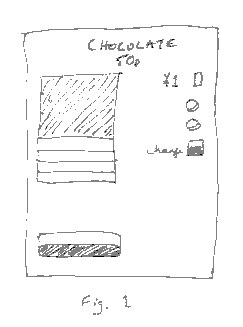

The toy example used to demonstrate the idea is a vending machine analogous to the ATM described above. The machine accepts a £1 coin and dispenses a chocolate bar and 50p change. The problem is that the user may press the button to get the chocolate bar, but forget to press a separate button required to release the change.

Much of the paper is concerned with the technical details of modelling the vending machine and the user in a form amenable to processing with the HOL (Higher Order Logic) theorem prover. Briefly, the system is a finite state machine (FSM), which responds to the user actions of inserting a coin and pressing the buttons. The user provides these stimuli while rationally seeking the goal state in which a bar of chocolate has been obtained. A desired invariant is the value of the user's possessions: valuing a bar at 50p, this should be the same after using the vending machine as before it. However, the attempt to prove this invariant fails, because the chocolate bar can be taken before the change is dispensed, and the modeled user is vulnerable to making the post-completion error.

The solution, of course, is not to blame humans for being vulnerable to human error, but to redesign the vending machine so that the change is dispensed first, ensuring that nothing remains to be done after the user's main goal has been satisfied. Provided with the altered design expressed in higher order logic, software is used to help formally prove that the post-completion error can no longer occur.

There is obvious value in an approach which may be generalized to a user model which reproduces our vulnerability to a variety of known cognitive errors, such as mode errors, which afflict our interactions with machines: it means that tools like model checkers may be used to check, through an automatic and comprehensive exploration of the possible interactions, not only that the machine's behaviour is correct with respect to formal or technical requirements, but also that it robustly guards against certain classes of error that its human users may make.

There is another advantage, not mentioned by the authors: the objective codification of knowledge regarding potential sources of human error. Those with an input into decisions about a user interface, who frequently may lack any specialist knowledge, can vehemently differ about how it should work, and it is easy to conceive of a situation where somebody pours scorn on a claim that a particular design is more likely to lead to error, or denies the problem's importance in some other way. In such a case, the claim is given the additional authority needed to shorten arguments when it is supported by the results of an automated check based on pre-existing, externally-supplied standards. This advantage applies even where the problem is obvious to a designer before the software check is done - that is, even in "toy" examples, hardly more complex than Curzon and Blandford's vending machine.

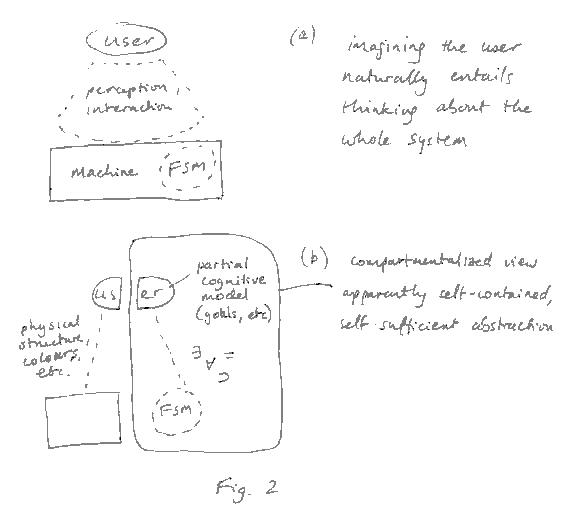

It is interesting, however, to note a potential pitfall of the approach, which may best be seen by a schematic representation of how it changes the way the user interface development activity may be imagined.

In the figure, (a) is a simple image of what is normally going on during user interface design: the user is imagined as human being interacting with the system. The behaviour of the system may wholly or partly be specified more abstractly, for example, using a FSM, but whenever we imagine the user we also imagine the whole physical context of interaction.

Part (b) shows how the process described by Curzon and Blandford changes that: now a partial model of the user, embodying goals and vulnerable to certain cognitive errors, is abstracted – allowing the interaction with the abstract FSM to be managed in a compartmentalized way. The richness of the physical environment and the way we respond and interact with it as humans is omitted.

While the capacity to step outside the abstract, mathematical model has obviously not been lost, it would be natural for an interaction designer to stay in the narrower frame from which supposedly inessential details have been omitted. To put it another way: we find the post-completion error by considering the mathematical model of user interaction, and, in the same frame, we find the solution: change the machine's FSM so that the user's change is dispensed first. Problem solved.

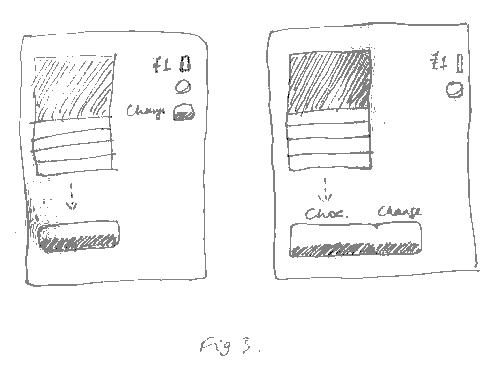

But if we consider the broader context, another solution might occur to us: change the vending machine so that the chocolate bar and the 50p change are dispensed into adjacent cubbyholes. Now, even if the chocolate appears first, it cannot be taken from the machine without the user having a natural reminder – from the empty tray marked "change" immediately beside it – that the transaction is not complete.

It could be argued that the user might erroneously assume that no change was due (though that would be different from a post-completion error), or that the adjacent tray design might only make the post-completion error less likely, rather than completely avoiding it.

Consider, then, a variation in which the 50p change and the chocolate bar are simultaneously deposited on the trays. In the original design, this does not really guard against the post-completion error: the user, looking at the chocolate in the cubbyhole at the bottom of the machine, might take this and leave the change. In the altered design, where the chocolate and the change appear together as well as simultaneously, it is far more difficult to imagine the post-completion error being made.

One might still reject the hypothesis that the narrowed mathematical frame of the model makes one less likely to come up with solutions outside it. In that case, it is at least a plausible hypothesis worth testing, perhaps by a research experiment.

And there's another issue: the only difference between one possible design which avoids the post-completion error and one which does not is that the former uses the same or adjacent trays for the chocolate and the change, and the latter does not. But the physical structure of the machine is not part of the mathematical model – which means that the abstract is not faithful to reality in one of the two cases.

Can't we just extend the mathematical model to incorporate such factors as the physical structure of the machine and the width of the field of vision of someone standing in front of it? Perhaps – but if you're are just now wondering about the details of how that might be done in higher order logic then you probably also appreciate something else: we've reached a stage where the designer has by creative insight actually solved a problem in practice, but faces substantial difficulties solving it in theory.

In summary then, the abstraction of a simplified formal model of certain cognitive functions from the user is a trickier proposition than it might appear, even after one has seen how it can be done. Similarly, user interaction design is a holistic activity: when we come up with new boxes which systematize parts of what we do, we may also need to be careful not to get stuck in them. In human-centred design, the designer is human too.

— Michael Breen, 2010.4.16